Publisher: Supplier of LED Display Time: 2022-06-28 17:00

Audio and video is a fun technology that has been developing for a long time, and much of today's technical knowledge still builds on its original foundations. Audio in particular is a lot of fun. Let's take a look at the basics of audio and video with the LCF LED display editor.

Audio and video development requires a grounding in the basics of images, video and audio, and in how to capture, render, process and transmit them across a range of development tasks and applications.

Capture answers where the data comes from, rendering answers how the data is displayed, processing answers how the data is manipulated, and transmission answers how the data is shared. Each of these areas can be explored in depth, and each gives rise to topics full of technical challenges.

1. Audio

Audio technology was invented for the purpose of recording, storing and playing back acoustic phenomena, so understanding acoustic phenomena first is a great help in learning digital audio.

(1) Basic knowledge of sound

Sound is a physical phenomenon: a vibrating object disturbs the surrounding air, the disturbance propagates through the air as a wave, and when it reaches the ear it is perceived by the auditory nerve as sound.

The three elements of sound are loudness, pitch and timbre.

Loudness is related to the amplitude of the vibration: the harder the object is struck, the larger the amplitude, the more strongly the eardrum vibrates, and the louder the sound.

Pitch is mainly related to frequency: the higher the frequency of the sound wave, the higher the pitch.

Timbre: at the same pitch (frequency) and loudness (amplitude), a piano and a violin still sound completely different, because they have different timbres, that is, different waveforms.

Sound can propagate through solids, liquids and gases, at a different speed in each medium. It cannot travel through a vacuum.
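To make the three elements concrete, here is a minimal sketch that synthesizes a periodic tone. It assumes a tone can be modeled as a sum of sine harmonics: the fundamental frequency sets the pitch, an overall scale factor sets the loudness, and the relative weights of the harmonics stand in for timbre. The function name `tone` and its parameters are illustrative, not from any library.

```python
import math

def tone(freq_hz, amplitude, harmonics, sample_rate=8000, duration_s=0.01):
    """Return samples of a periodic tone (hypothetical helper).

    freq_hz   -> pitch: the fundamental frequency
    amplitude -> loudness: overall scale factor of the waveform
    harmonics -> timbre: weights of the 1st, 2nd, 3rd... harmonics
    """
    n = int(round(sample_rate * duration_s))
    samples = []
    for i in range(n):
        t = i / sample_rate
        value = sum(
            w * math.sin(2 * math.pi * freq_hz * (k + 1) * t)
            for k, w in enumerate(harmonics)
        )
        samples.append(amplitude * value)
    return samples

# Same pitch (440 Hz) and amplitude, different harmonic mix -> different timbre
pure = tone(440, 0.5, [1.0])
rich = tone(440, 0.5, [1.0, 0.5, 0.25])
# Same pitch and timbre, doubled amplitude -> louder
louder = tone(440, 1.0, [1.0])
```

Changing only `harmonics` changes the waveform shape but not its period, which is why two instruments playing the same note can sound different.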

(2) Digital audio

Digitizing natural sound (an analog signal) starts with sampling, which discretizes the signal on the time axis: the instantaneous value of the analog signal x(t) is taken point by point at a fixed time interval Δt. According to the Nyquist theorem, the sampling rate must be more than twice the highest frequency in the signal for it to be reconstructed faithfully. The higher the sampling rate, the more faithfully the sound is restored and the better the quality, but the more storage it takes.
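A minimal sketch of sampling, assuming the analog signal is modeled as a Python function `x(t)`: samples are taken every Δt = 1 / sample rate, and a helper checks the Nyquist criterion. Both function names are hypothetical.

```python
import math

def sample_signal(x, sample_rate_hz, duration_s):
    """Take instantaneous values of x(t) every dt = 1 / sample_rate_hz seconds."""
    dt = 1.0 / sample_rate_hz
    n = int(round(duration_s * sample_rate_hz))
    return [x(i * dt) for i in range(n)]

def satisfies_nyquist(sample_rate_hz, max_signal_freq_hz):
    """Nyquist criterion: the sample rate must exceed twice the highest frequency."""
    return sample_rate_hz > 2 * max_signal_freq_hz

# A 1 kHz sine wave sampled at 44100 Hz for 1 ms gives 44 samples
x = lambda t: math.sin(2 * math.pi * 1000 * t)
samples = sample_signal(x, 44100, 0.001)
```

For example, `satisfies_nyquist(44100, 20000)` is `True`, which is why 44.1 kHz is enough to cover the roughly 20 kHz upper limit of human hearing.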

Quantization approximates the continuously varying amplitude with a finite number of amplitude levels, turning the continuous amplitude of the analog signal into a finite set of discrete values spaced at fixed intervals.
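A minimal quantization sketch, assuming the sampled amplitudes are floats normalized to [-1.0, 1.0] and mapped to signed integer levels by scaling with the largest positive level. This is one common convention, not the only one; `quantize` is a hypothetical name.

```python
def quantize(samples, bit_depth=16):
    """Map continuous amplitudes in [-1.0, 1.0] to signed integer levels."""
    max_level = 2 ** (bit_depth - 1) - 1   # 32767 for 16-bit
    min_level = -(2 ** (bit_depth - 1))    # -32768 for 16-bit
    out = []
    for s in samples:
        q = round(s * max_level)           # nearest discrete level
        out.append(max(min_level, min(max_level, q)))  # clip out-of-range input
    return out

levels = quantize([0.0, 1.0, -1.0, 2.0])
```

Each output is one of a finite set of values, so any amplitude between two levels is rounded to the nearer one; that rounding is the quantization error.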

Coding represents each quantized value as a binary number according to a defined rule, producing a binary (or multi-level) digital signal stream. That stream can be carried over digital links such as cables or satellite channels. At the receiving end the process is reversed, and the original analog signal is restored after post-filtering.

This digitization process is called pulse code modulation (PCM), and raw audio is usually stored as PCM data. A piece of PCM data is described by a few parameters, most commonly the sample rate, bit depth, byte order and number of channels.

Sample rate: the number of samples taken per second, in Hz.

Bit depth: the number of bits used to represent each sample, typically 16.

Byte order: whether the PCM samples are stored big-endian or little-endian. In practice it is usually little-endian, which matches most common processors and avoids byte swapping.

Number of channels: the number of audio channels in the PCM data, e.g. 1 for mono or 2 for stereo.
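The four parameters above fully determine the byte layout of raw PCM. A minimal sketch using Python's standard `struct` module, assuming 16-bit signed samples and two interleaved channels (left, right, left, right, ...); `to_pcm_bytes` is a hypothetical helper.

```python
import struct

def to_pcm_bytes(left, right, little_endian=True):
    """Interleave two channels of 16-bit samples into raw PCM bytes.

    Layout per frame: L0 R0 L1 R1 ..., each sample a signed 16-bit integer.
    """
    if len(left) != len(right):
        raise ValueError("channels must have the same number of samples")
    order = "<" if little_endian else ">"   # '<' little-endian, '>' big-endian
    interleaved = [s for pair in zip(left, right) for s in pair]
    return struct.pack(f"{order}{len(interleaved)}h", *interleaved)

pcm = to_pcm_bytes([1, -2], [256, 0])
# b'\x01\x00\x00\x01\xfe\xff\x00\x00': low byte first for each sample
```

Switching `little_endian` to `False` stores the high byte first, so the same samples produce different bytes; a player must know the byte order to decode the data correctly.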

(3) Audio encoding

Take CD quality as an example: each sample is 2 bytes (16 bits), the sampling rate is 44100 Hz and there are 2 channels. The bit rate of CD audio is therefore 44100 * 16 * 2 = 1,411,200 bit/s, i.e. 1378.125 kbps when 1 kbit is taken as 1024 bits (1411.2 kbps with 1 kbit = 1000 bits). One minute of audio then needs 1378.125 * 60 / 8 / 1024 ≈ 10.09 MB of storage, which is not small.
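The CD arithmetic can be checked with a few lines. This sketch assumes uncompressed PCM with no container overhead; the helper names are hypothetical.

```python
def pcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Uncompressed PCM bit rate in bits per second."""
    return sample_rate_hz * bit_depth * channels

def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Storage needed for `seconds` of uncompressed PCM, in bytes."""
    return pcm_bitrate_bps(sample_rate_hz, bit_depth, channels) * seconds // 8

cd_bps = pcm_bitrate_bps(44100, 16, 2)          # 1411200 bit/s
cd_kbps_1024 = cd_bps / 1024                    # 1378.125 (1 kbit = 1024 bit)
one_minute = pcm_size_bytes(44100, 16, 2, 60)   # 10584000 bytes
one_minute_mib = one_minute / 1024 / 1024      # about 10.09
```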

Compression algorithms include lossy compression and lossless compression.

Commonly used audio encoding methods are as follows:

MP3 (MPEG-1 or MPEG-2 Audio Layer III) is a once very popular lossy digital audio coding format, designed to drastically reduce the amount of audio data.

AAC (Advanced Audio Coding) is an MPEG-2-based audio coding technology jointly developed by Fraunhofer IIS, Dolby Laboratories, AT&T, Sony and other companies and launched in 1997. AAC has a higher compression ratio than MP3: for audio files of the same size, AAC generally delivers higher sound quality.

WMA (Windows Media Audio) is a digital audio compression format developed by Microsoft; the format family includes both lossy and lossless variants.

2. Video

Pixel: a screen divides its effective display area into a grid of tiny cells, each of which displays a single color. This cell is the smallest element of the image and is called a pixel.

Resolution: the number of pixels a screen has horizontally and vertically, usually written as A×B (e.g. 1920×1080). For a given screen size, the higher the resolution, the smaller each pixel and the smoother and finer the displayed image.

Each pixel's R, G and B values drive the corresponding red, green and blue sub-pixels at that position on the screen, and together the pixels display the whole image.

(1) Representing images with RGB

An image is made up of pixels, so how is the RGB value of a pixel represented? There are two common ways.

Floating-point representation

Each sub-pixel is a normalized value in the range 0.0 to 1.0; OpenGL, for example, represents color components this way.

Integer representation

Each sub-pixel is an 8-bit value ranging from 0 to 255 (0x00 to 0xFF).
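Converting between the two representations is a matter of scaling by 255. A minimal sketch, assuming values outside 0.0 to 1.0 should be clamped and that rounding to the nearest integer is acceptable; the function names are hypothetical.

```python
def float_to_u8(c):
    """Normalized component (0.0 to 1.0) -> 8-bit integer (0 to 255)."""
    c = min(1.0, max(0.0, c))   # clamp to the valid range first
    return round(c * 255)

def u8_to_float(v):
    """8-bit integer (0 to 255) -> normalized component (0.0 to 1.0)."""
    return v / 255.0

white = float_to_u8(1.0)    # 255, i.e. 0xFF
```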

For example, the RGBA_8888 format uses 4 × 8 bits for each pixel, while RGB_565 uses 5 + 6 + 5 = 16 bits. A 1280 × 720 image in RGBA_8888 format therefore takes 1280 * 720 * 32 bits = 1280 * 720 * 32 / 8 bytes = 3,686,400 bytes (about 3.5 MB), which is also how much memory the bitmap occupies. The raw data of every single image is huge.
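A short sketch of both points: packing an 8-bit-per-channel color into RGB_565, and computing raw frame sizes. It assumes red occupies the most significant bits of the 16-bit value (the usual RGB_565 layout) and that frames have no row padding; the helper names are hypothetical.

```python
def pack_rgb565(r, g, b):
    """Pack 8-bit R, G, B into one 16-bit RGB_565 value (5 + 6 + 5 bits).

    The low bits of each channel are dropped: 3 bits of R and B, 2 bits of G.
    """
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def frame_bytes(width, height, bits_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8

rgba8888 = frame_bytes(1280, 720, 32)   # 3686400 bytes
rgb565 = frame_bytes(1280, 720, 16)     # 1843200 bytes, half the size
```

RGB_565 halves the memory cost at the price of color precision; green keeps one extra bit because the eye is most sensitive to it.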

(2) Representing images with YUV

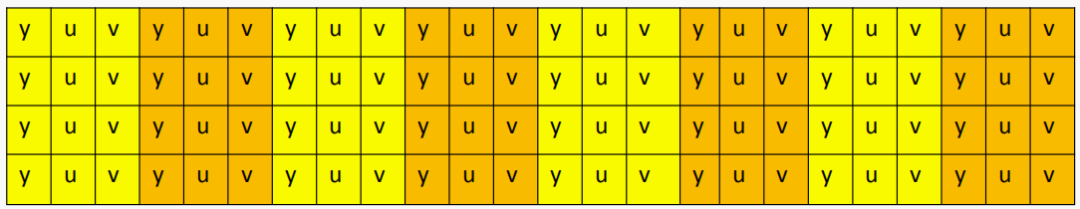

YUV is another color encoding method, and raw video data is generally stored in a YUV format. Y represents luminance (brightness), also called the gray value. U and V represent chrominance, describing the hue and saturation of the color; together they specify the color of the pixel.

Luminance is computed from the RGB input signal as a weighted sum of its R, G and B components, with green contributing the largest share.

Chrominance defines the hue and saturation of a color and is represented by Cb and Cr (the C stands for chroma). Cr reflects the difference between the red component of the RGB input signal and its luminance, and Cb reflects the difference between the blue component and the luminance.

Raw video frames use the YUV color space because it separates the luminance signal Y from the chrominance signals U and V. With only the Y signal and no UV signals, the image is a black-and-white grayscale image. Color television adopted YUV for exactly this reason: black-and-white sets use only the Y signal, so they can still receive color broadcasts. The most common YUV formats use 8 bits per component, so each value ranges from 0 to 255.

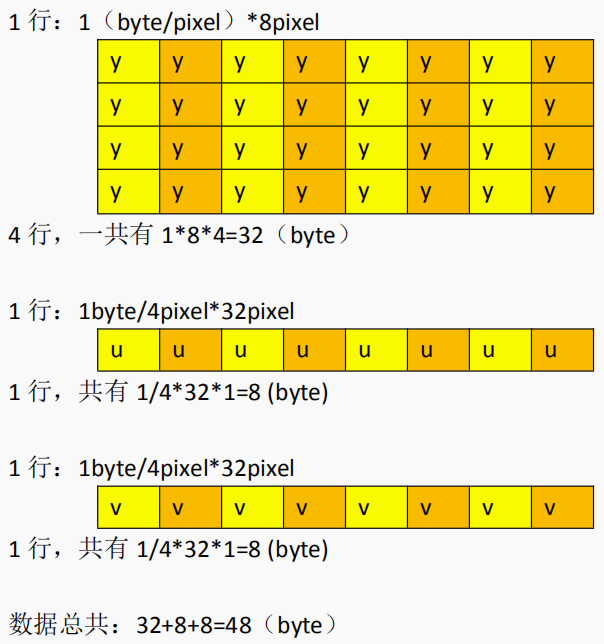

Just as audio has to be sampled, so do images. The most common YUV sampling format is 4:2:0.

There are two main categories of YUV formats: planar and packed.

1. In a planar YUV format, the Y values of all pixels are stored consecutively, followed by the U values of all pixels, then the V values of all pixels.

2. In a packed YUV format, the Y, U and V values of each pixel are stored together, pixel by pixel. For a packed YUV420 image with a resolution of 8×4 (w×h), the memory layout is as follows.

For a YUV420P (YUV420 planar) image with a resolution of 8×4 (w×h), the memory layout is as follows.
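The planar 4:2:0 layout can be described by plane offsets alone. This sketch assumes the common I420 ordering (Y plane, then U, then V), even width and height, and no row padding (stride equal to width); the helper names are hypothetical.

```python
def i420_plane_offsets(width, height):
    """(offset, size) in bytes of the Y, U and V planes of a YUV420P frame."""
    y_size = width * height
    c_size = (width // 2) * (height // 2)   # one U and one V per 2x2 block of Y
    return {
        "Y": (0, y_size),
        "U": (y_size, c_size),
        "V": (y_size + c_size, c_size),
    }

def split_i420(buf, width, height):
    """Slice a raw YUV420P buffer into its three planes."""
    planes = {}
    for name, (offset, size) in i420_plane_offsets(width, height).items():
        planes[name] = buf[offset:offset + size]
    return planes

layout = i420_plane_offsets(8, 4)
# {'Y': (0, 32), 'U': (32, 8), 'V': (40, 8)}: 48 bytes in total
```

The Y plane on its own is a valid grayscale image, which is the luminance/chrominance separation described earlier.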

The storage format of a YUV stream is closely related to its sampling method. There are three mainstream sampling methods: YUV 4:4:4, YUV 4:2:2 and YUV 4:2:0.

In YUV 4:4:4 sampling, each Y has its own set of UV components. In YUV 4:2:2 sampling, every two horizontally adjacent Ys share one set of UV components. In YUV 4:2:0 sampling, every four Ys (a 2×2 block) share one set of UV components.
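The sharing ratios translate directly into frame sizes. A minimal sketch, assuming 8 bits per component and even dimensions; `yuv_frame_bytes` and the table name are hypothetical.

```python
from fractions import Fraction

# Average bytes per pixel at 8 bits per component
BYTES_PER_PIXEL = {
    "4:4:4": Fraction(3),      # 1 Y + 1 U + 1 V per pixel
    "4:2:2": Fraction(2),      # 2 Y share 1 U + 1 V -> 4 bytes per 2 pixels
    "4:2:0": Fraction(3, 2),   # 4 Y share 1 U + 1 V -> 6 bytes per 4 pixels
}

def yuv_frame_bytes(width, height, sampling):
    """Raw size of one YUV frame in bytes for the given sampling scheme."""
    return int(width * height * BYTES_PER_PIXEL[sampling])

hd_420 = yuv_frame_bytes(1920, 1080, "4:2:0")   # 3110400 bytes
```

At 1920×1080, 4:2:0 needs half the bytes of 4:4:4 (6,220,800), which is a large part of why it is the default for video.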

(3) YUV and RGB conversion

Everything rendered on the screen (text, pictures or anything else) must ultimately be converted to RGB, so how do we convert between the YUV and RGB representations?

To implement a format conversion, first understand the characteristics of the source and target formats and the relationship between them; that is the core of the program. For RGB-to-YUV conversion, read the RGB data and apply the YUV formulas (the first three below) to obtain the YUV data. For YUV-to-RGB conversion, read the YUV data and apply the RGB formulas (the last three). A commonly used set of formulas is as follows.

Full-range BT.601 (as used by JPEG/JFIF), 8 bits per component:

Y = 0.299R + 0.587G + 0.114B;

U = -0.168736R - 0.331264G + 0.5B + 128;

V = 0.5R - 0.418688G - 0.081312B + 128;

R = Y + 1.402(V - 128);

G = Y - 0.344136(U - 128) - 0.714136(V - 128);

B = Y + 1.772(U - 128);
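A minimal per-pixel sketch of both directions, assuming the full-range BT.601 (JFIF) coefficients and 8-bit components. Results are rounded and clamped to 0 to 255, since the formulas can otherwise produce slightly out-of-range values. The function names are hypothetical.

```python
def clamp_u8(v):
    """Round to the nearest integer and clamp to the 8-bit range 0 to 255."""
    return max(0, min(255, int(round(v))))

def rgb_to_yuv(r, g, b):
    """Full-range BT.601 (JFIF) RGB -> YUV, 8 bits per component."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return clamp_u8(y), clamp_u8(u), clamp_u8(v)

def yuv_to_rgb(y, u, v):
    """Inverse of rgb_to_yuv (full-range BT.601 / JFIF)."""
    r = y + 1.402 * (v - 128)
    g = y - 0.344136 * (u - 128) - 0.714136 * (v - 128)
    b = y + 1.772 * (u - 128)
    return clamp_u8(r), clamp_u8(g), clamp_u8(b)

gray = rgb_to_yuv(128, 128, 128)   # (128, 128, 128): U = V = 128 means "no color"
```

Any gray input (R = G = B) yields U = V = 128, so only Y carries information, which matches the black-and-white compatibility point made earlier. Real video often uses the limited range (16 to 235 for Y) instead; that variant needs different coefficients.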

(4) Video encoding

Why encode video at all? Simply because raw video data in YUV or RGB form is far too large. Encoding compresses video so that it takes up less space, which makes it easier to store and transmit.

The role of video coding is to compress video pixel data (RGB, YUV, etc.) into a video stream, reducing the amount of video data. There are several ways to encode video:

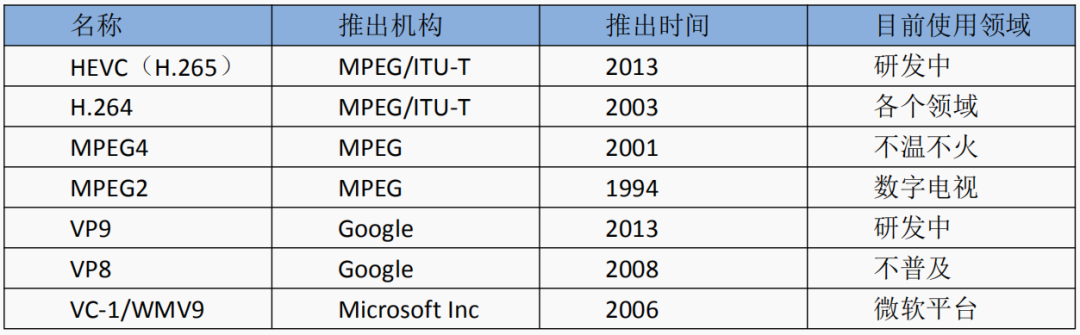
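To see how large raw video is, here is a rough estimate for 1080p at 30 frames per second in YUV 4:2:0 with 8 bits per component (12 bits per pixel on average). The 5 Mbit/s encoded bit rate used for the ratio is a hypothetical example, not a figure from this article; the helper names are also hypothetical.

```python
def raw_video_bitrate_bps(width, height, fps, bits_per_pixel):
    """Uncompressed bit rate: pixels per frame * bits per pixel * frames per second."""
    return width * height * bits_per_pixel * fps

def compression_ratio(raw_bps, encoded_bps):
    """How many times smaller the encoded stream is than the raw video."""
    return raw_bps / encoded_bps

bps = raw_video_bitrate_bps(1920, 1080, 30, 12)   # 746496000 bit/s
mib_per_second = bps / 8 / 1024 / 1024           # about 89 MiB of raw data per second
ratio = compression_ratio(bps, 5_000_000)        # about 149 for a 5 Mbit/s stream
```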

(5) Coding standard

Two major organizations develop video coding standards. One is the International Telecommunication Union (ITU-T), which has produced standards such as H.261, H.263, H.263+ and H.264; the other is the International Organization for Standardization (ISO), which has produced MPEG-1, MPEG-2, MPEG-4 and others.

WMV is a streaming media format from Microsoft, an upgrade and extension of Microsoft's own ASF format. WMV files can be played while they are still downloading, which makes the format well suited to online playback and transmission.

VP8 comes from On2 Technologies' VPx family of codecs (VP6, VP7, VP8, and later VP9); Google acquired On2 and made VP8 the video codec of its WebM project. The VPx codecs are designed for web video.

WebRTC: in May 2010, Google acquired the VoIP software developer Global IP Solutions for approximately $68.2 million, gaining the technology on which WebRTC is built. WebRTC supports VP8 and VP9.

AV1 is an open, royalty-free video coding format designed for video transmission over the Internet.

AVS (short for the "Advanced Audio and Video Coding for Information Technology" series of standards) is China's second-generation source coding standard with independent intellectual property rights. The series also includes conformance testing and other supporting standards.

H.265 (HEVC) offers significant compression improvements over H.264: it compresses video roughly twice as efficiently, so video of the same visual quality takes up about half the space.

VP9 is an open, royalty-free video coding standard developed by Google and is regarded as the next-generation successor to VP8.

(6) H.265 and VP9

In a comparison test of H.265 and VP9 encoding quality (where a smaller value means better quality), the two turned out to be very close: their overall average scores differed by only 0.001, so in practical applications there is almost no difference.

In encoding time, VP9 clearly beat H.265: at 4K as well as at 1920- and 1280-wide resolutions, VP9 encoded much faster than H.265. H.265, however, decoded slightly more efficiently than VP9.

H.265 builds on the H.264 standard family and is more widely used commercially, mostly in security, government and military, enterprise and similar scenarios. However, because it has so many patent holders, its licensing costs are high, and it has met considerable resistance to adoption.

VP9 was developed by Google and is free to use. In practice, Microsoft, Apple and other companies do not want VP9 to become dominant, and other Internet companies do not want the mainstream video format to be controlled by a single vendor. As a result, VP9 is currently supported mainly in Google's own products, and few other large vendors have adopted it.

In the LCF LED display editor's view, H.265 is currently widely used in enterprise and security applications, while VP9 is used more in Internet applications thanks to its simple, practical solutions and ease of development.